Journey Into Unreal Engine 4's AI Perception

April 5, 2020

Unreal Engine 4 has always touted strong AI-reaction capabilities using Behavior Trees and Blackboards. But there was never an obvious choice for AI-perception, with multiple competing offerings - including the PawnSensingComponent, trace tasks, and the Environmental Query System (EQS) - and no clear winner. The AIPerceptionComponent is the latest entry in the race, and so far it seems to be fairly robust. In this article, I discuss my initial thoughts about the AI Perception system.

Initialize the AI Perception Component

The AI Perception component appears to have been out of the "experimental" phase for several versions now, so there is already some scaffolding for it built into AAIController. The base AAIController already declares a public pointer to a UAIPerceptionComponent.

    UPROPERTY(VisibleDefaultsOnly, Category = AI)
    UAIPerceptionComponent* PerceptionComponent;

However, the component is not initialized by default. Let's change that. Derive a new controller class from AAIController - call it whatever you want (going forward, CodeController; the code in this article names the class ACustomAIController). Then, in the constructor for your new class, initialize the built-in pointer with a new AI Perception component.

ACustomAIController::ACustomAIController()

{

PerceptionComponent = CreateDefaultSubobject<UAIPerceptionComponent>(TEXT("Perception"));

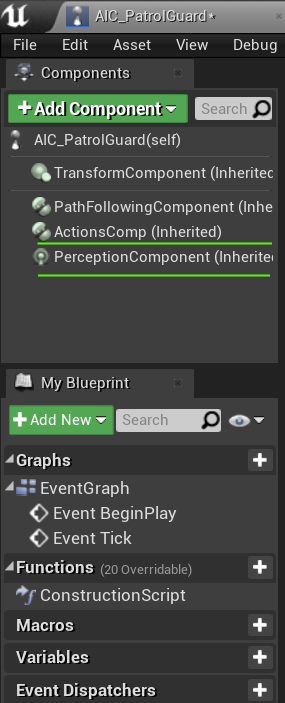

}Our AI Perception component is now initialized. Confirm this by jumping into the Editor, deriving a Blueprint from your custom controller class (going forward, BPController), and taking a look at the Components tab. You should see our new Perception component in there:

Pick a Sense to Perceive

Now that we have an initialized AI Perception component, we need to pick a sense to perceive. Because it's the easiest to understand, we'll start with Sight. When you get the basics down, you can extend the same principles to perceive other pre-defined senses including Hearing, Touch, and Damage (or create your own).

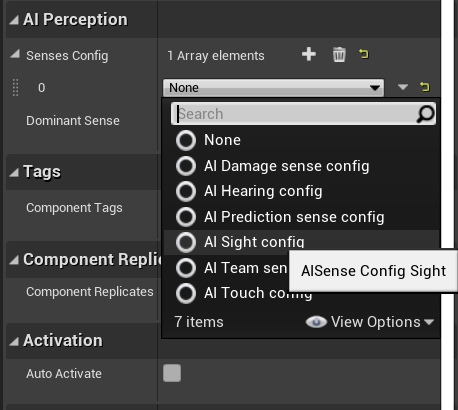

In BPController, select Perception from the Components tab. In the Perception component's details, add an item to the array SensesConfig and pick AI Sight config.

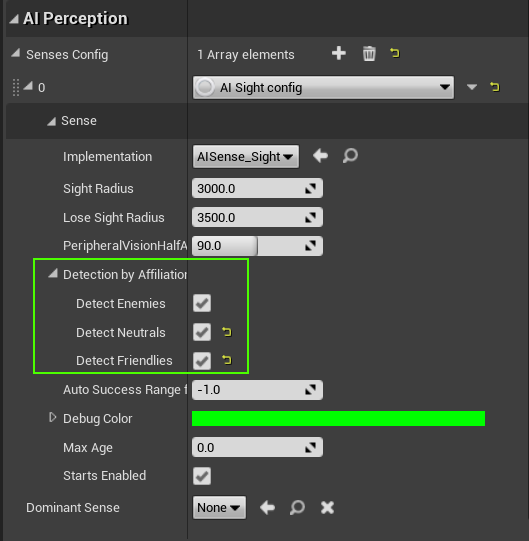

The only setting we'll change is the affiliation scope. At this time, we can only change affiliation (i.e. team) in C++, so we will work around this by allowing our AI Perception to see actors on all teams. Check every box under Detection by Affiliation. We may need to configure some other Sight sense settings down the line, but for now we'll leave everything else at default.
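To make the effect of those checkboxes concrete, here is a small standalone sketch of an affiliation filter. It does not use the engine's types: `Attitude`, `AffiliationFilter`, and its field names are hypothetical stand-ins chosen for illustration, and the sketch only assumes that each checkbox gates one attitude category.

```cpp
// Hypothetical stand-ins for illustration; these are NOT the engine's types.
enum class Attitude { Friendly, Neutral, Hostile };

// One flag per checkbox under "Detection by Affiliation".
struct AffiliationFilter {
    bool detectEnemies = false;
    bool detectNeutrals = false;
    bool detectFriendlies = false;

    // Returns true when an actor with the given attitude passes the filter.
    bool Allows(Attitude attitude) const {
        switch (attitude) {
            case Attitude::Hostile:  return detectEnemies;
            case Attitude::Neutral:  return detectNeutrals;
            case Attitude::Friendly: return detectFriendlies;
        }
        return false;
    }
};

// The workaround from the text: tick every box so that no actor is
// filtered out because of its team.
inline AffiliationFilter DetectAllAffiliations() {
    AffiliationFilter filter;
    filter.detectEnemies = true;
    filter.detectNeutrals = true;
    filter.detectFriendlies = true;
    return filter;
}
```

With every flag set, `Allows` returns true for all three attitudes, which is why ticking every box sidesteps the need to assign teams in C++ for now.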

Connect Perception to a Pawn

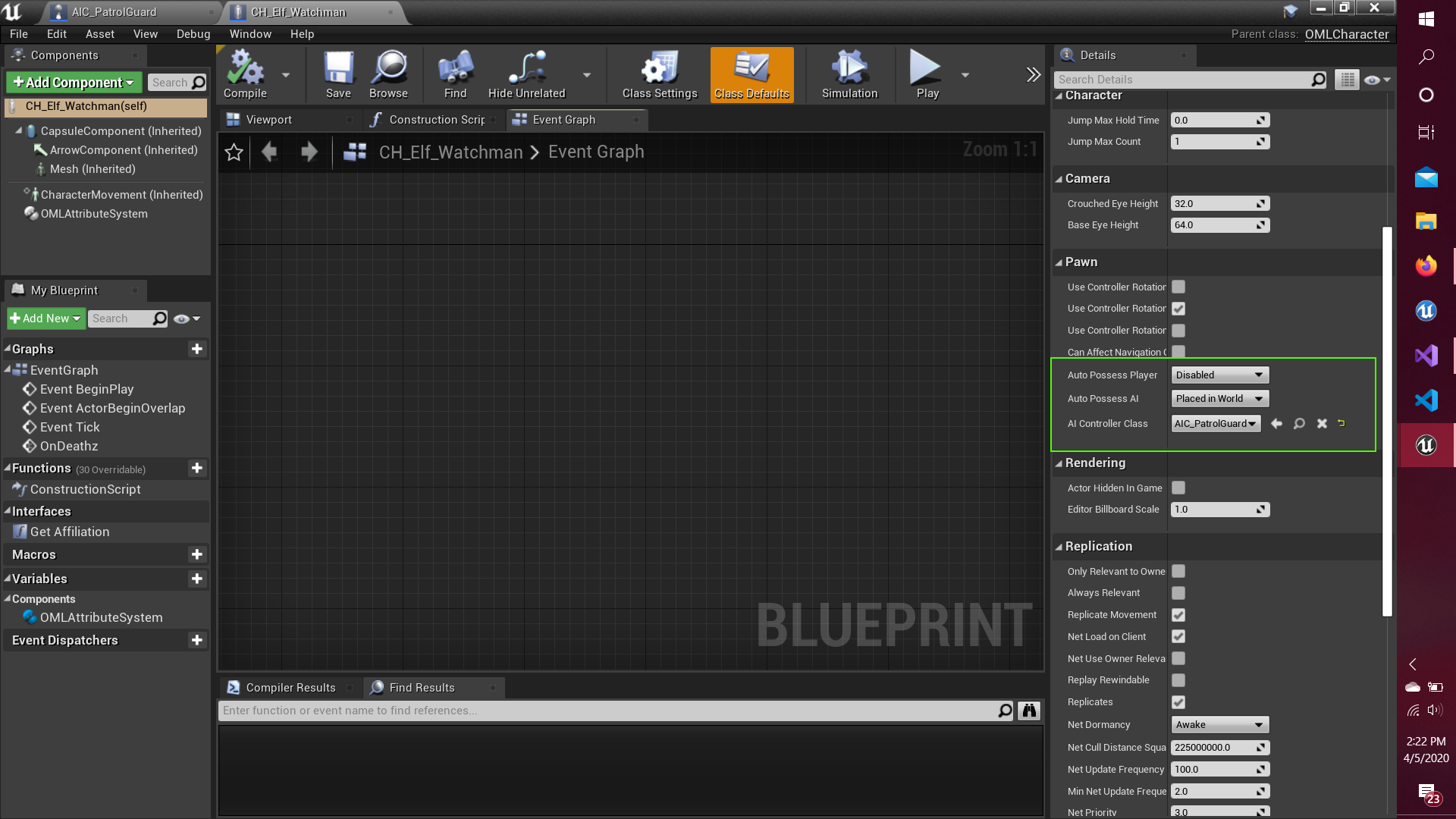

Our BPController is ready to go, but it needs a host in the game world. My instinct is that the settings we configured on the AI Perception component (sight distance, perception arc, etc.) will piggyback off of the controller's possessed pawn - for example, perception arc will depend on the forward angle of the possessed pawn. Pick the pawn of your choice (I'm picking a watchman character), and set the pawn to be controlled by BPController, since that is where we configured the Sight sense. And with that, our AI Perception is ready to perceive the world.
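The idea that sight distance and perception arc are measured from the possessed pawn can be sketched as a plain geometric test. This is a standalone illustration, not the engine's implementation: `Vec2` and `IsInSightCone` are hypothetical names, the test is done in 2D, and the arc is assumed to be a half-angle measured from the pawn's forward vector.

```cpp
#include <cmath>

// Minimal 2D vector; a hypothetical helper, not an engine type.
struct Vec2 {
    double x;
    double y;
};

// Returns true when `target` is within `radius` of `eye` and within
// `halfAngleDeg` degrees of the `forward` direction (which need not be
// normalized).
bool IsInSightCone(Vec2 eye, Vec2 forward, Vec2 target,
                   double radius, double halfAngleDeg) {
    const double dx = target.x - eye.x;
    const double dy = target.y - eye.y;
    const double distance = std::hypot(dx, dy);
    if (distance > radius) {
        return false;  // too far away to be seen
    }
    if (distance == 0.0) {
        return true;   // target is exactly at the eye position
    }
    const double forwardLength = std::hypot(forward.x, forward.y);
    if (forwardLength == 0.0) {
        return false;  // no facing direction, so no cone to test against
    }
    // Cosine of the angle between the forward vector and the direction
    // to the target, computed from the dot product.
    const double cosToTarget =
        (dx * forward.x + dy * forward.y) / (distance * forwardLength);
    const double pi = std::acos(-1.0);
    const double cosLimit = std::cos(halfAngleDeg * pi / 180.0);
    return cosToTarget >= cosLimit;
}
```

For example, with the eye at the origin facing along +x, a radius of 1000, and a half-angle of 45 degrees, a target at (500, 400) is inside the cone, while targets at (-500, 0) or (2000, 0) are not. Rotating the pawn changes `forward`, which is why the arc follows the pawn's facing.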

Add Stimuli

Now we need to give our watchman something to see. All stimuli come from the AI Perception Stimuli Source component. Add one to the actor of your choice. I will start with my main player character, but any actor can be sensed (even if it isn't visible!).

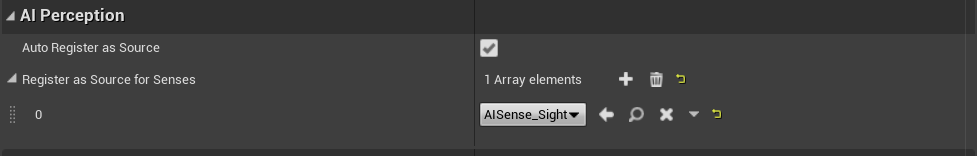

Then configure it. Make sure that Auto Register as Source is checked, and add Sight to the list of senses this source will stimulate. You might notice that one source can stimulate multiple senses, as it does in real life. For example, a campfire might stimulate sight (by shedding light on surroundings), sound (the crackle of burning logs), smell (smoky), and touch (emanating warmth).

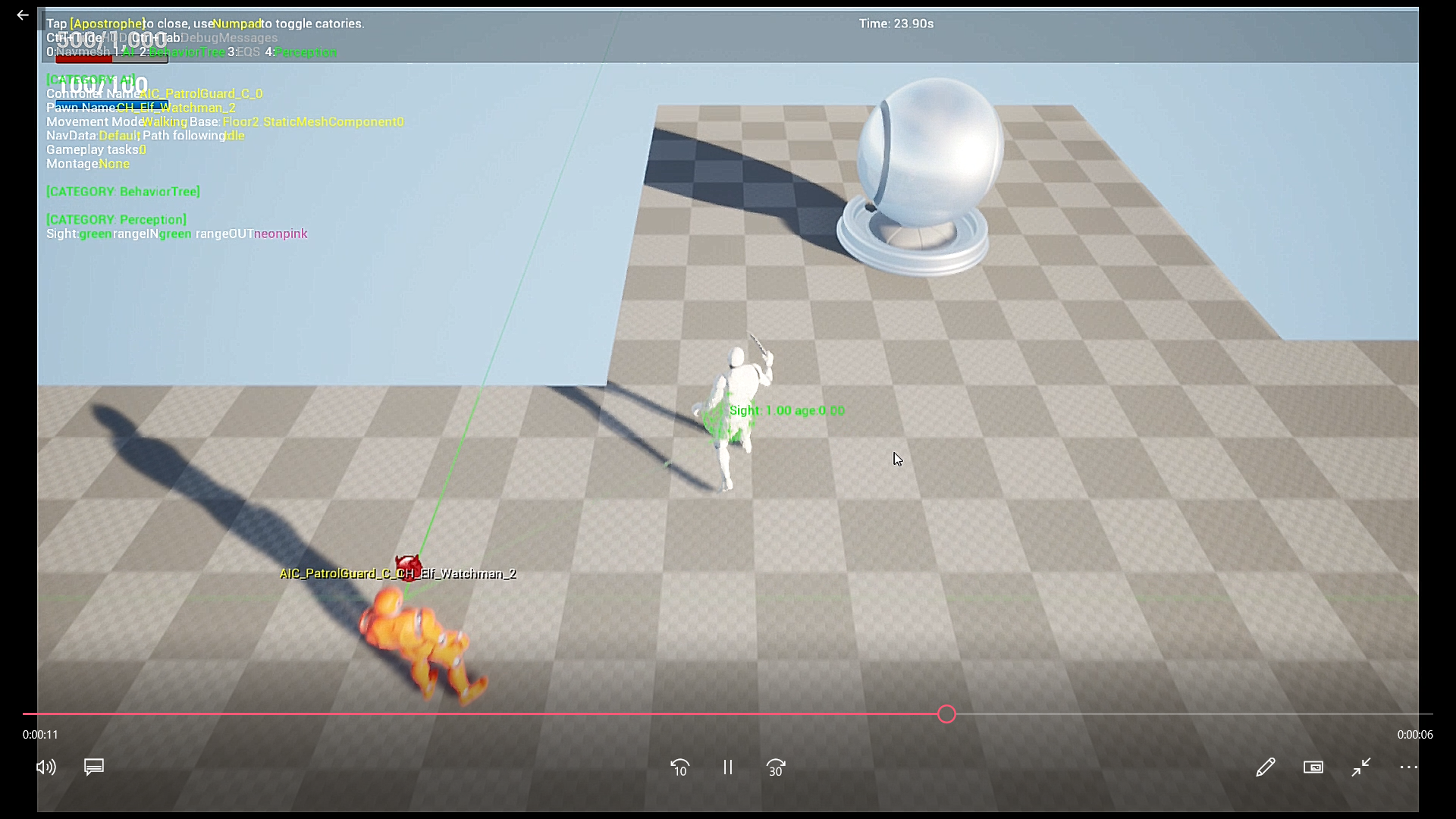

Enable Debugging and Confirm that Stimuli Are Received

The AI Perception system comes with a powerful debugging overlay, but it is disabled by default. You can enable it by going to Project Settings -> Engine -> AI System and checking Enable Debugger Plugin.

To activate the overlay in-game, press the apostrophe key (') to open the AI debugger, then press 4 on the numpad to switch to AI Perception debugging.

If everything went well, we should see our AI perceive our character when it walks by.